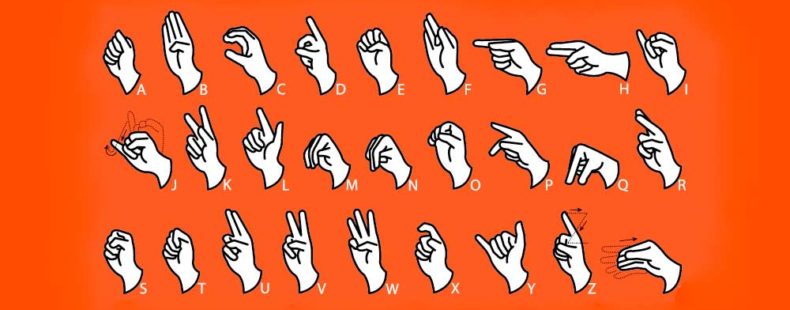

American sign language classifier from scratch [99.63% accurate]

A deep-learning image classifier for American Sign Language letters, written from scratch in PyTorch with a few fastai helper functions.

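from fastai.vision.all import *  # also brings torch, nn, F, tensor, DataLoader and show_image into scope
import pandas as pd
from os import path

torch.cuda.set_device(0)
# Create new tensors on the GPU by default (deprecated in recent PyTorch releases
# in favour of torch.set_default_device('cuda'))
torch.set_default_tensor_type('torch.cuda.FloatTensor')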

if path.exists('/storage'):
    # Paperspace
    train_df = pd.read_csv('/storage/data/asl/sign_mnist_train.csv')
    test_df = pd.read_csv('/storage/data/asl/sign_mnist_test.csv')
elif path.exists('/Users/vinay/Datasets/'):
    # Local
    train_df = pd.read_csv('/Users/vinay/Datasets/asl/sign_mnist_train.csv')
    test_df = pd.read_csv('/Users/vinay/Datasets/asl/sign_mnist_test.csv')
else:
    # GCP
    train_df = pd.read_csv('/home/jupyter/datasets/asl/sign_mnist_train.csv')
    test_df = pd.read_csv('/home/jupyter/datasets/asl/sign_mnist_test.csv')

# Randomly select 20% of training data as validation data
valid_df = train_df.sample(frac=0.2)
train_df = train_df.drop(valid_df.index)
train_df.shape, valid_df.shape, test_df.shape

The training data is arranged so that each row of the dataframe holds one image: the first column is the label and the remaining 784 columns are the pixel intensities of a 28×28 grayscale image.
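To make the row layout concrete, here is a plain-Python sketch (standard library only, no pandas or PyTorch) that splits one CSV row into its label and pixel values scaled to [0, 1], which is what get_labels and get_image_tensors do for the whole dataframe. The helper name split_row and the toy row with 4 pixels instead of 784 are hypothetical.

```python
import csv
import io

def split_row(row):
    """Split one Sign MNIST-style CSV row into (label, pixels scaled to [0, 1])."""
    label = int(row[0])                          # first column: class label
    pixels = [int(v) / 255.0 for v in row[1:]]   # remaining columns: 0-255 intensities
    return label, pixels

# Hypothetical toy file: a header plus one row with 4 pixels instead of 784.
toy_csv = "label,pixel1,pixel2,pixel3,pixel4\n3,0,51,255,102\n"
reader = csv.reader(io.StringIO(toy_csv))
next(reader)                                     # skip the header line
toy_label, toy_pixels = split_row(next(reader))
print(toy_label, toy_pixels)  # 3 [0.0, 0.2, 1.0, 0.4]
```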

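def get_labels(df):
    # The first column holds the class label of each image
    return tensor(df.iloc[:, 0].values).to('cuda')

# Select the pixel intensities, scale them to values between 0 and 1, and stack the
# per-image 1-D tensors (784 values each) into a 2-D tensor of shape (n_images, 784)
def get_image_tensors(df):
    return torch.stack([tensor(image_array)/255. for image_array in df.iloc[:, 1:].values]).to('cuda')

def get_dataset(df):
    # Materialise the (image, label) pairs in a list so the dataset can be iterated
    # more than once (a bare zip object is exhausted after the first pass)
    return list(zip(get_image_tensors(df), get_labels(df)))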

dataset = get_dataset(train_df)
labels = get_labels(train_df)

for image_tensor, label in list(dataset)[:2]:
    print(label)
    show_image(torch.reshape(image_tensor, (28, 28)), cmap='gray')

Generate random weights drawn from a standard normal distribution (mean 0, standard deviation 1) and mark them for gradient tracking with .requires_grad_(). The autograd engine records every operation applied to these tensors in a graph (the leaves are the input tensors, such as the weights, and the roots are the output tensors, such as the loss). When .backward() is called on the output (here, the loss), it applies the chain rule back through the graph and stores the derivatives in the .grad attribute of each leaf tensor.
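The mechanism can be sketched with a toy, scalar-only autograd engine in plain Python. The Value class below is hypothetical and only illustrates the idea (each operation remembers its inputs, and backward() on the output walks the graph in reverse, accumulating derivatives into .grad of the leaves); PyTorch's engine is far more general and is not implemented like this.

```python
class Value:
    """Toy scalar node in a computation graph (illustration only)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None    # pushes this node's grad to its parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))
        def _backward():
            self.grad += out.grad        # d(a + b)/da = 1
            other.grad += out.grad       # d(a + b)/db = 1
        out._backward = _backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))
        def _backward():
            self.grad += other.data * out.grad   # d(a * b)/da = b
            other.grad += self.data * out.grad   # d(a * b)/db = a
        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph, then apply the chain rule from the root back to the leaves.
        order, seen = [], set()
        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()

# prediction = x * w + b for a single pixel x, weight w and bias b
x, w, b = Value(0.5), Value(-1.0), Value(2.0)
pred = x * w + b
pred.backward()
print(pred.data, w.grad, b.grad, x.grad)  # 1.5 0.5 1.0 -1.0
```

As with the real weights tensor, the gradient of w ends up equal to the input it multiplied (0.5), and the bias always receives the upstream gradient unchanged.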

def get_random_weights(rows, cols):
    return torch.randn(rows, cols).to('cuda').requires_grad_()

# pytorch equivalent
parameters_py = nn.Linear(28*28, len(train_df.label.unique())+1)
parameters_py

# Labels run from 0 to 24 with no examples of 9 (J), since J and Z involve motion, so there are
# 24 unique labels; adding 1 gives 25 output columns, one for every possible label index
weights = get_random_weights(28*28, len(train_df.label.unique())+1)
bias = get_random_weights(1, len(train_df.label.unique())+1)
parameters = (weights, bias)
weights.shape, bias.shape

To get predictions we multiply each weight by the corresponding pixel intensity, sum the products, and add the bias. Matrix multiplication performs this for every image and every class at once, without any explicit loops.
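Written out for a batch of N flattened images, the computation is the following. The shapes follow the code (784 = 28 × 28 pixels, 25 output columns); the symbol names are chosen here for illustration, and the column of ones stands for the broadcasting that adds the bias row to every image.

```latex
\begin{aligned}
\hat{Y} &= X W + \mathbf{1}_N\, b,
  \qquad X \in \mathbb{R}^{N \times 784},\;
  W \in \mathbb{R}^{784 \times 25},\;
  b \in \mathbb{R}^{1 \times 25},\;
  \mathbf{1}_N \in \mathbb{R}^{N \times 1} \\
\hat{y}_{ij} &= \sum_{k=1}^{784} x_{ik}\, w_{kj} + b_j
\end{aligned}
```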

image_tensors = get_image_tensors(train_df)
image_tensors.shape, weights.shape

def get_predictions(image_tensors, weights, bias):
    return image_tensors@weights + bias

predictions = get_predictions(image_tensors, weights, bias)
predictions.shape

With random weights the predictions are only about 5% accurate, so let's optimise them.

def accuracy(df, parameters):
    weights, bias = parameters
    # Evaluate on the dataframe passed in (validation or test), not a hard-coded one
    df_images, df_labels = get_image_tensors(df), get_labels(df)
    with torch.no_grad():  # evaluation only, so there is no need to record the graph
        preds = get_predictions(df_images, weights, bias)
    pred_classes = torch.argmax(preds, dim=1)
    return (pred_classes == df_labels).float().mean()

accuracy(valid_df, parameters)
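The metric boils down to taking the index of the largest score in each row (the argmax) and counting how often it matches the label. A plain-Python sketch; the function name accuracy_from_scores and the scores for 3 images over 3 classes are hypothetical.

```python
def accuracy_from_scores(scores, labels):
    """Fraction of rows whose highest-scoring class equals the true label."""
    predicted = [max(range(len(row)), key=row.__getitem__) for row in scores]  # argmax per row
    correct = sum(p == y for p, y in zip(predicted, labels))
    return correct / len(labels)

# Hypothetical raw scores (not probabilities) for 3 images over 3 classes.
toy_scores = [[2.0, 0.1, -1.0],   # argmax 0
              [0.3, 0.2, 0.9],    # argmax 2
              [1.5, 1.7, 0.0]]    # argmax 1
toy_labels = [0, 2, 0]
print(accuracy_from_scores(toy_scores, toy_labels))  # 0.6666666666666666
```

As a sanity check, guessing uniformly among the 24 letters in the dataset would score about 1/24 ≈ 4.2%, which is in line with the accuracy of the random weights.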

A loss function measures how good the model's predictions are. Defining one turns learning into an optimisation problem: we adjust the parameters to minimise the loss.

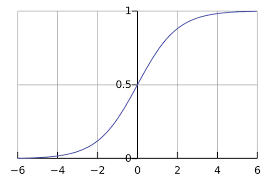

The softmax function turns raw predictions into probabilities: every value lies in (0, 1) and each row sums to 1. On a probability scale, 0.900 and 0.999 look almost the same, yet the second leaves a 100 times smaller chance of being wrong (0.001 versus 0.1). Taking the log stretches the interval (0, 1) out to (-inf, 0), so this difference becomes visible in the loss.
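A plain-Python sketch of both points: softmax produces positive values that sum to 1, and the negative log turns the small gap between 0.9 and 0.999 into a loss ratio of roughly 105. The helper name softmax_list and the raw scores are made up for illustration.

```python
import math

def softmax_list(scores):
    """Naive softmax: exponentiate, then normalise so the outputs sum to 1."""
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax_list([2.0, 1.0, 0.1])   # hypothetical raw scores for 3 classes
print([round(p, 3) for p in probs], round(sum(probs), 6))

# Negative log-probability of the correct class for two confident predictions
loss_09 = -math.log(0.9)      # about 0.105
loss_0999 = -math.log(0.999)  # about 0.001
print(loss_09 / loss_0999)    # roughly 105
```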

def softmax(predictions, log=False):
    if log:
        return torch.log(torch.exp(predictions)/torch.exp(predictions).sum(dim=1, keepdim=True))
    return torch.exp(predictions)/torch.exp(predictions).sum(dim=1, keepdim=True)

# pytorch equivalent

activations_py = F.log_softmax(predictions, dim=1)

activations = softmax(predictions, log=True)

loss_of_each_image = activations.gather(1, labels.unsqueeze(-1))

max(loss_of_each_image), min(loss_of_each_image), loss_of_each_image.shape

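There is a problem here: if we take the log after the softmax, some losses come out as infinity. When one score is much larger than another, the exponential of the smaller one underflows, its probability rounds to 0, and log(0) is -inf. To avoid this we use PyTorch's F.log_softmax(), which computes the log-probabilities directly in a numerically stable way.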
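The standard way to compute log-softmax stably is the log-sum-exp trick: subtract the largest score before exponentiating, so the log is never taken of a probability that has underflowed to zero. A plain-Python sketch with hypothetical function names; Python floats are 64-bit, so the example needs a wider gap between scores than the float32 tensor example that follows.

```python
import math

def naive_log_softmax(scores):
    """log(softmax(x)) computed literally; probabilities can underflow to 0.0."""
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    # math.log(0.0) raises ValueError, so report -inf the way torch.log does
    return [math.log(p) if p > 0.0 else float("-inf") for p in probs]

def stable_log_softmax(scores):
    """Log-sum-exp trick: x_i - (m + log(sum_j exp(x_j - m))) with m = max(x)."""
    m = max(scores)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum_exp for s in scores]

extreme_scores = [0.0, -800.0]             # exp(-800) underflows to 0.0 even in float64
print(naive_log_softmax(extreme_scores))   # [0.0, -inf]
print(stable_log_softmax(extreme_scores))  # [0.0, -800.0]
```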

torch.log(F.softmax(tensor([62.0, -51.0]), dim=0)), F.log_softmax(tensor([62.0, -51.0]), dim=0)

We now have 25 log-probabilities per image, one for each category. For each image we select the value at the index of its true class, then take the mean over all training images and negate it to get the loss.
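In plain Python the selection and averaging look like this: for each row, pick the log-probability at the true label (the job gather does), then negate the mean. The function name nll_loss_list and the log-probabilities for 2 images over 3 classes are hypothetical.

```python
import math

def nll_loss_list(log_probs, targets):
    """Mean negative log-probability of each row's true class."""
    picked = [row[y] for row, y in zip(log_probs, targets)]  # like gather(1, targets.unsqueeze(-1))
    return -sum(picked) / len(picked)

# Hypothetical log-probabilities for 2 images over 3 classes.
toy_log_probs = [[math.log(0.7), math.log(0.2), math.log(0.1)],
                 [math.log(0.1), math.log(0.8), math.log(0.1)]]
toy_targets = [0, 1]
print(nll_loss_list(toy_log_probs, toy_targets))  # -(log 0.7 + log 0.8) / 2, about 0.290
```

Applied to the small gather demo ([[1, 2], [2, 5]] with index [0, 1]), the same row[y] selection picks 1 from the first row and 5 from the second.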

list_of_lists = tensor([[1, 2], [2, 5]])

index = tensor([0, 1])

list_of_lists.gather(1, index.unsqueeze(-1))

loss = activations_py.gather(1, labels.unsqueeze(-1)).mean()

# pytorch equivalent

loss_py = -F.nll_loss(activations_py, labels)

loss, loss_py

def cross_entropy_loss(predictions, labels):
    activations = F.log_softmax(predictions, dim=1)
    loss = activations.gather(1, labels.unsqueeze(-1)).mean()
    return -loss

# pytorch equivalent

cross_entropy_loss_py = F.cross_entropy(predictions, labels)

cross_entropy_loss(predictions, labels), cross_entropy_loss_py

def train_epoch(dataloader, parameters, learning_rate, accuracy_func, loss_func, valid_df, epoch_no):
    weights, bias = parameters
    for image_tensors, labels in dataloader:
        preds = get_predictions(image_tensors, weights, bias)
        loss = loss_func(preds, labels)
        loss.backward()
        # Gradient descent step; updating .data keeps autograd from recording the update
        weights.data -= weights.grad*learning_rate
        bias.data -= bias.grad*learning_rate
        # Gradients accumulate across backward() calls, so reset them after every step
        weights.grad.zero_()
        bias.grad.zero_()
    # Measure validation accuracy once per epoch, after all the batches
    accuracy = accuracy_func(valid_df, (weights, bias))
    if epoch_no%5 == 0:
        print(f'epoch={epoch_no}; loss={loss.item()}; accuracy={accuracy.item()}')
    return weights, bias

def train(parameters, no_of_epochs, learning_rate, batch_size):
    for i in range(1, no_of_epochs+1):
        dl = DataLoader(get_dataset(train_df), batch_size=batch_size)
        weights, bias = train_epoch(dl, parameters, learning_rate, accuracy, cross_entropy_loss, valid_df, i)

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=2048)

We started with random parameters whose predictions were about 4% accurate. After training for 50 epochs I was able to reach 28% accuracy, so let's train for some more epochs.

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=1024)

I'm experimenting with batch sizes and learning rates to see how they affect the training speed.
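One concrete way the batch size affects training speed: for a fixed number of epochs, the number of weight updates per epoch is ceil(rows / batch_size), so smaller batches give more (noisier) updates per pass over the data. The sketch assumes the last partial batch is kept (drop_last=False), and the row count of 22,000 is an assumed stand-in for len(train_df), not a measured value.

```python
import math

def updates_per_epoch(n_rows, batch_size):
    """Mini-batches (and hence gradient steps) in one pass, keeping the last partial batch."""
    return math.ceil(n_rows / batch_size)

n_rows = 22_000  # assumed size of train_df; replace with len(train_df)
for bs in (2048, 1024, 512, 256):
    print(bs, updates_per_epoch(n_rows, bs))
# 2048 11
# 1024 22
# 512 43
# 256 86
```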

train(parameters, no_of_epochs=50, learning_rate=2e-1, batch_size=512)

Bumping up the learning rate did speed up the optimisation, so let's train for some more epochs.

train(parameters, no_of_epochs=50, learning_rate=2e-1, batch_size=512)

Let's try a larger batch size and learning rate; maybe it generalises better?

train(parameters, no_of_epochs=50, learning_rate=3e-1, batch_size=1024)

train(parameters, no_of_epochs=50, learning_rate=2e-1, batch_size=512)

Now that we are a bit above 95% accuracy, let's take smaller steps to avoid overshooting.
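Why smaller steps help near a minimum can be seen on a toy 1-D loss f(w) = w², whose gradient is 2w. Each gradient descent step multiplies w by (1 - 2·lr): a small learning rate shrinks w steadily, a larger one jumps past the minimum on every step but still settles, and a rate above 1 overshoots by more each time and diverges. The loss function, the helper name and the learning rates are illustrative only, not taken from the ASL model.

```python
def gradient_descent_1d(w, learning_rate, steps):
    """Minimise f(w) = w**2 (gradient 2*w) with plain gradient descent."""
    for _ in range(steps):
        grad = 2 * w
        w = w - learning_rate * grad   # same rule as weights -= weights.grad * learning_rate
    return w

print(gradient_descent_1d(1.0, learning_rate=0.1, steps=20))  # factor 0.8 per step: smooth decay
print(gradient_descent_1d(1.0, learning_rate=0.9, steps=20))  # factor -0.8: overshoots, still converges
print(gradient_descent_1d(1.0, learning_rate=1.1, steps=20))  # factor -1.2: overshoots and diverges
```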

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=1024)

Can we get more out of the single-layer NN?

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=2048)

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=256)

It seems that a lower batch size works better here?

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=256)

I never expected to get a 98.9% accurate model with just one layer. Let's check the accuracy on the test data.

accuracy(test_df, (weights, bias))

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=256)

train(parameters, no_of_epochs=50, learning_rate=1e-1, batch_size=512)

accuracy(test_df, (weights, bias))

Yeah! It's around 99.6% accurate. I tried training for more epochs, but the model seemed to overfit (the loss improves but the accuracy decreases).